
A few years ago, SEO was predictable. It was enough to choose keywords, create relevant content, optimize the page, and you had every chance of getting to the top of Google’s search results. But 2025 changed the rules of the game. AI Mode, a new search mode, appeared on the scene, and traditional SEO was on the verge of extinction. And it’s not just an algorithm upgrade anymore. It is a fundamental transformation of the very concept of search.


Last month at SEO Week, during his keynote “The Brave New World of SEO,” Michael King reiterated that, yes, there is significant overlap between organic results and AI Overviews. But we are not ready for what will happen when memory, personalization, MCP (Model Context Protocol), and agentic capabilities are added to the mix. With the announcement of new features in AI Mode, everything Michael King talked about is already part of the Google search experience, or will be by the end of the year.

In this article, we will analyze:

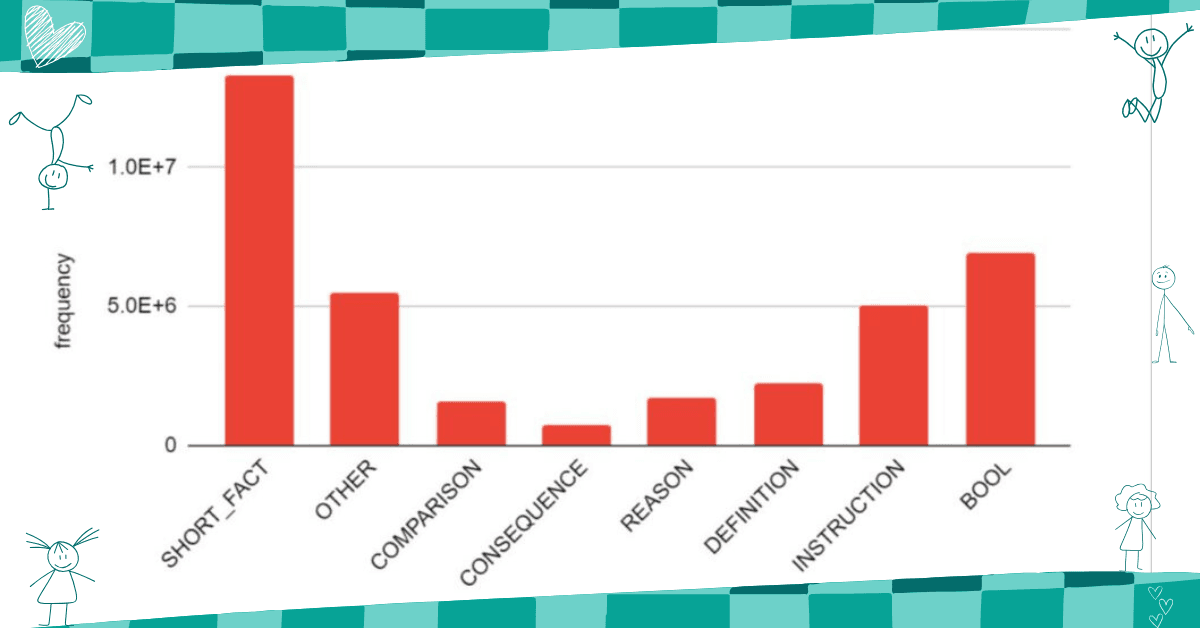

AI Mode is not just another Google feature. It is a completely new mode in which search stops being a list of links and becomes a synthesized, logically structured answer. You open Google and ask a question, but what happens next is nothing like a normal search. There are no blue links. Instead, you get a friendly, context-aware paragraph that answers even the questions you haven’t had a chance to ask yet.

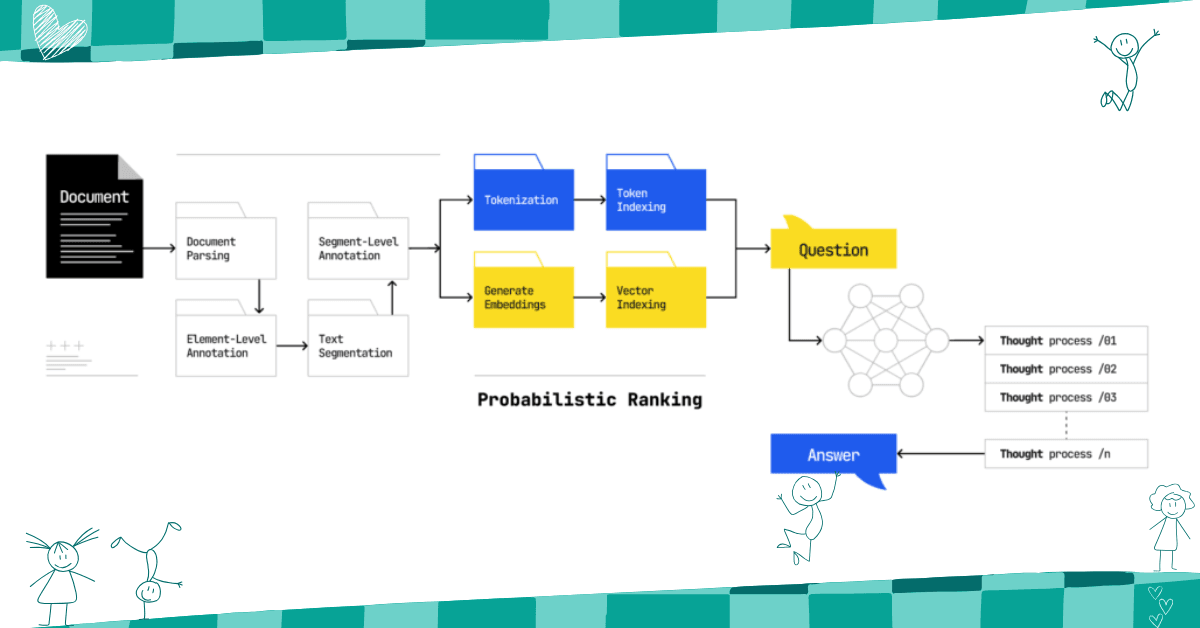

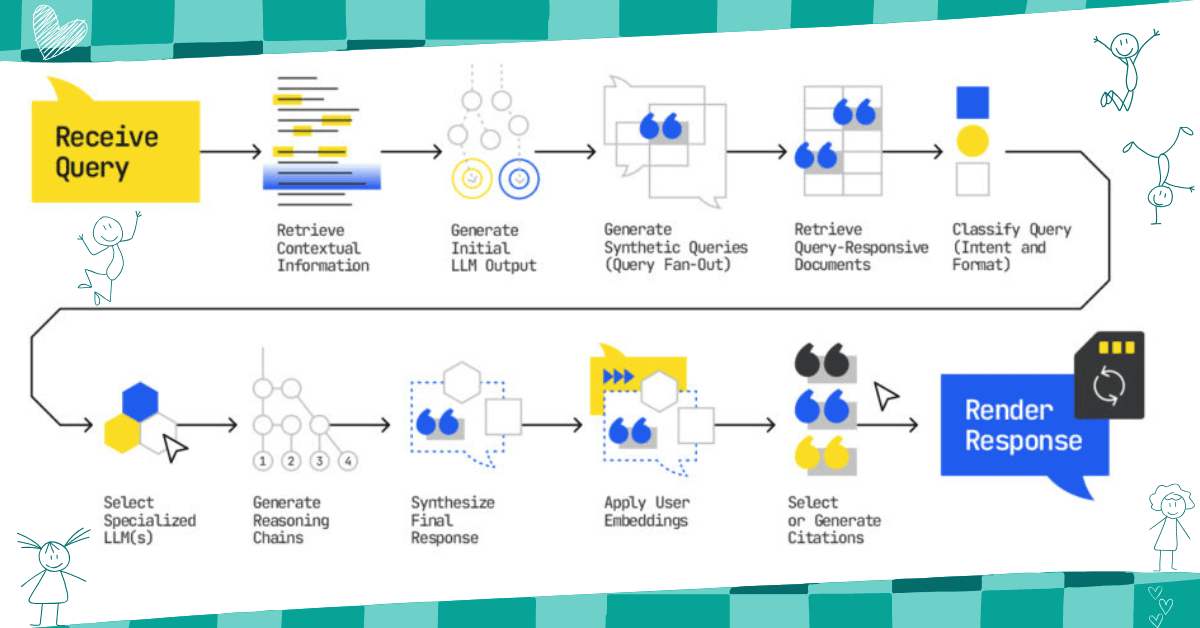

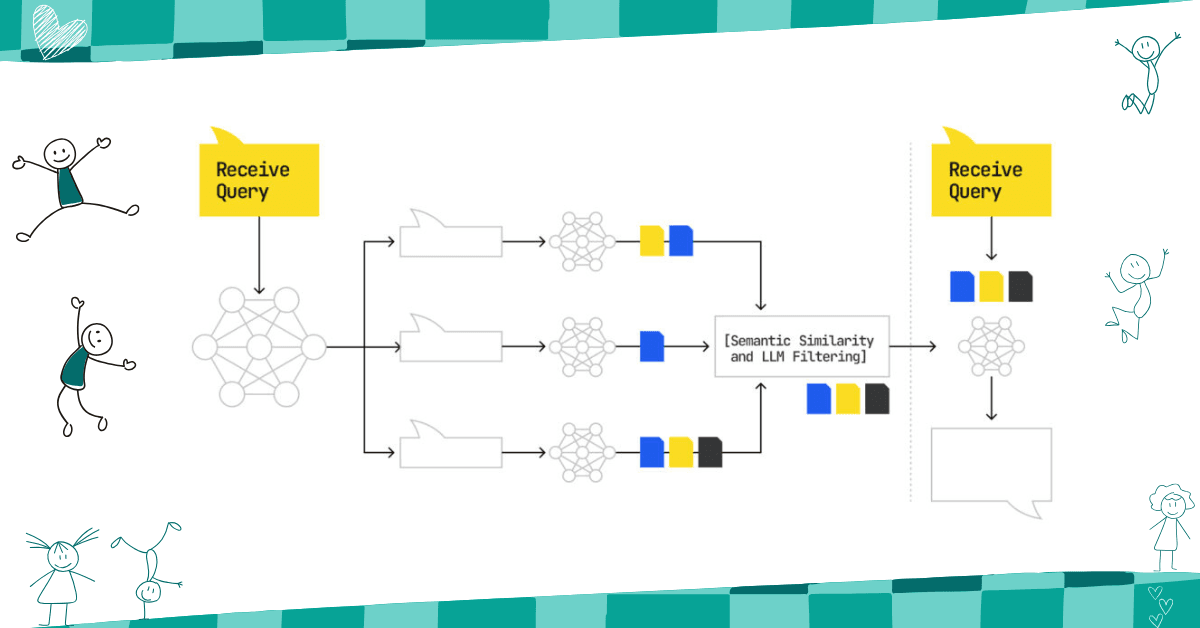

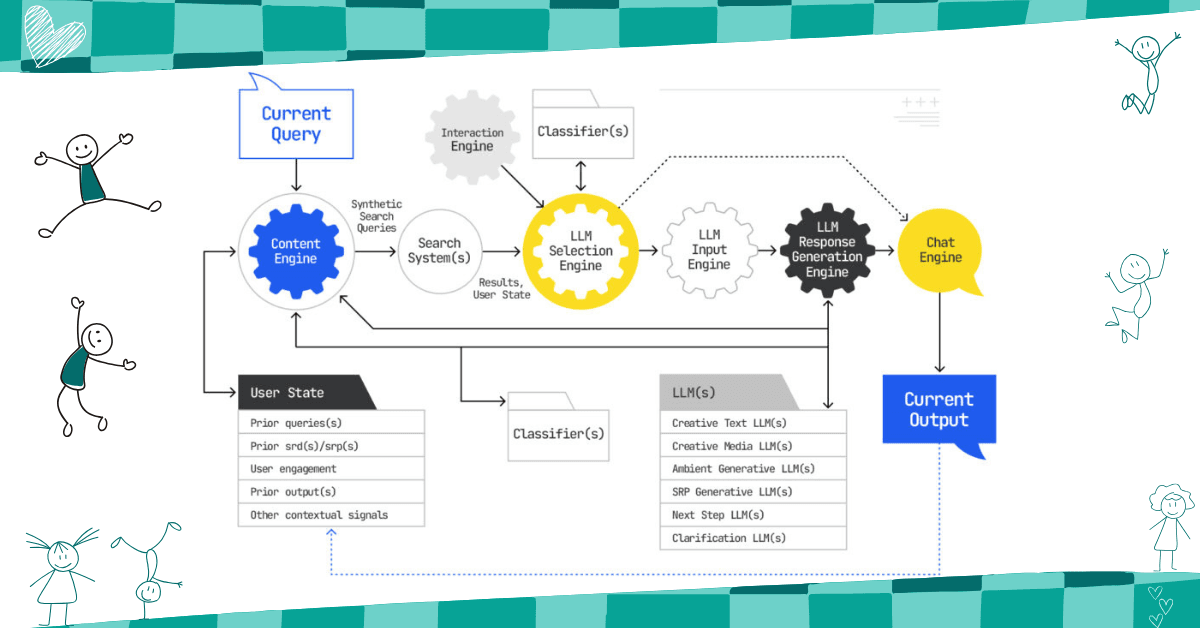

Beneath the surface, what looks like a single answer is actually a ballet of machine reasoning. The user enters a query, and Google does not just search for pages; it generates sub-queries (query fan-out). Each sub-query is processed separately, and relevant passages are extracted from the index for each one.

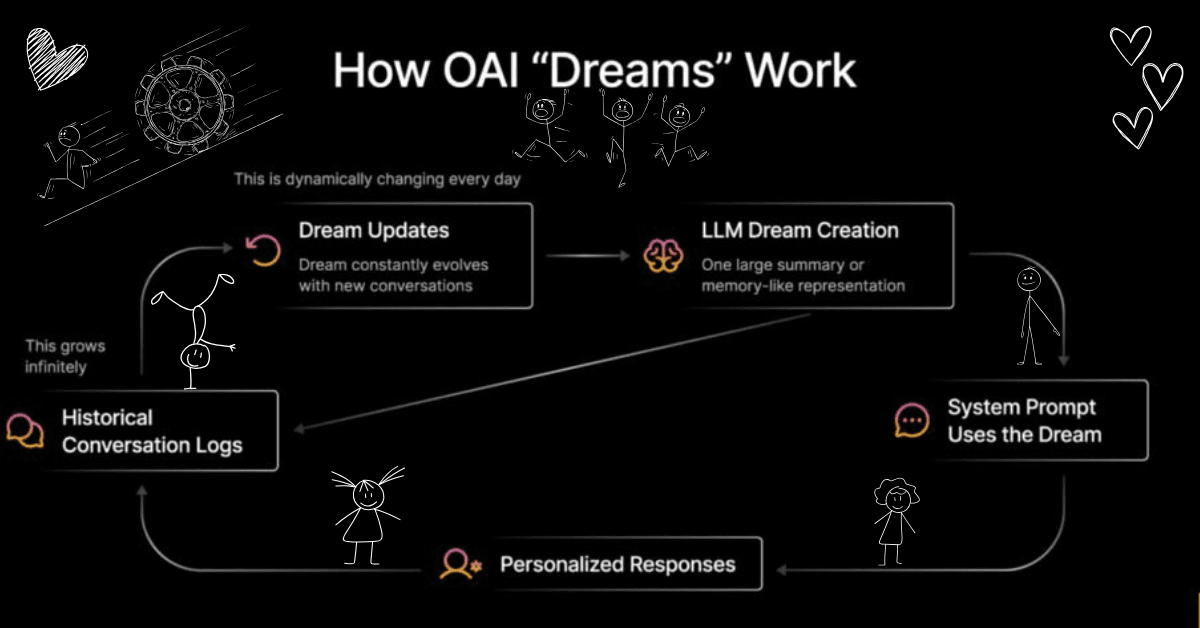

“Stateful chat means that Google builds up a background memory of you over time. This memory consists of aggregated embeddings that represent past conversations, topics you’ve been interested in, and your search patterns. It accumulates and is used to generate responses that become more personalized over time. The query is no longer just ‘What is the best electric SUV?’ but ‘What does “best” mean to this user right now, given their priorities?’”

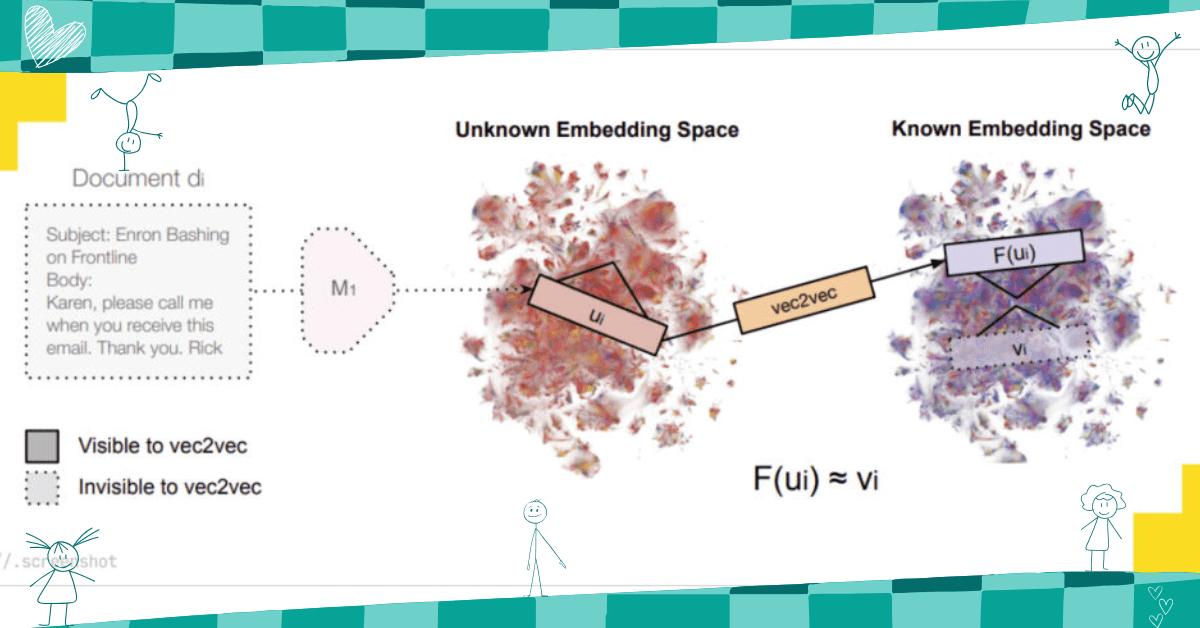

AI Mode personalizes responses using User Embeddings, described in the patent application “User Embedding Models for Personalization of Sequence Processing Models.” This personalization allows Google to tailor AI Mode answers to each user without retraining the underlying LLM.

AI Mode is a multi-level architecture built on top of the classic Google index. Instead of processing each query in isolation, the system keeps the user’s context: previous queries, location, devices, and behavioral signals. All of this is turned into a vector embedding that lets Google understand intent not only at the moment of the query but also as it evolves over time.
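To make the idea of an aggregated user embedding more concrete, here is a minimal Python sketch. It assumes each past interaction has already been encoded as a small vector (the three axes in the example, family, performance, and budget interest, are hypothetical) and combines them with an exponentially decayed average so that recent behavior weighs more. The patent describes a learned embedding model; this weighted average is only an illustrative stand-in for that idea, not Google’s method.

```python
def user_embedding(history: list[list[float]], decay: float = 0.5) -> list[float]:
    """Aggregate past interaction vectors into one profile vector.

    `history` is ordered oldest to newest. The newest vector gets weight 1,
    the one before it `decay`, then `decay**2`, and so on. This decayed mean
    is an illustrative stand-in, not Google's learned user-embedding model.
    """
    if not history:
        raise ValueError("history must contain at least one vector")
    n = len(history)
    dim = len(history[0])
    weights = [decay ** (n - 1 - i) for i in range(n)]
    total = sum(weights)
    return [
        sum(w * vec[d] for w, vec in zip(weights, history)) / total
        for d in range(dim)
    ]


if __name__ == "__main__":
    # Hypothetical axes: [family interest, performance interest, budget interest]
    past = [
        [0.0, 1.0, 0.0],  # older search about acceleration
        [1.0, 0.0, 0.0],  # recent search about third-row seats
    ]
    print(user_embedding(past))
```

With the default decay, the recent family-oriented search dominates the profile: roughly 0.67 on the first axis versus 0.33 on the second.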

How the answer is formed:

Once a custom corpus has been assembled, AI Mode calls a set of specialized large language models (LLMs). Each has its own purpose, depending on the type of query and the user’s intended need (e.g., a generalizer, a comparator, a validation model). A final model assembles a “script” of the answer from their outputs.

Important: although the patent describes multiple LLMs, this is not a classic MoE (Mixture of Experts) architecture with a single router. Instead, models are selectively orchestrated depending on context and intent. It is more like a smart “middleware” platform than one big model.
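The orchestration idea can be sketched in a few lines of Python. This is a toy under loud assumptions: the “models” are hypothetical stub functions rather than LLM calls, the intent cues that trigger the comparator are invented for illustration, and the validation step only deduplicates. It shows the shape of context-dependent model selection, not how Google actually routes queries.

```python
from typing import Callable


# Hypothetical stand-ins for specialized models. In a real system each of
# these would be a call to a different (or differently prompted) LLM.
def generalizer(query: str) -> str:
    return f"Overview: {query}"


def comparator(query: str) -> str:
    return f"Comparison table: {query}"


def validate(parts: list[str]) -> list[str]:
    """Toy validation step: drop empty parts and exact duplicates, keep order."""
    return list(dict.fromkeys(p for p in parts if p))


# Crude intent cues (an assumption for illustration) that trigger the comparator.
COMPARISON_CUES = ("best", " vs ", "compare")


def plan(query: str) -> list[Callable[[str], str]]:
    """Select which specialized models to call for this query."""
    models: list[Callable[[str], str]] = [generalizer]
    padded = f" {query.lower()} "
    if any(cue in padded for cue in COMPARISON_CUES):
        models.append(comparator)
    return models


def answer(query: str) -> list[str]:
    """Run the selected models, then let the validation step assemble the 'script'."""
    draft = [model(query) for model in plan(query)]
    return validate(draft)


if __name__ == "__main__":
    print(answer("best electric SUV"))       # generalizer + comparator
    print(answer("how do heat pumps work"))  # generalizer only
```

The point of the design is that the set of models varies per query, unlike a single MoE router inside one network.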

Google is no longer limited to a single user query. AI Mode uses query fan-out, in which a single query is decomposed into dozens of sub-queries. This is a change to the entire platform, which takes more and more external context into account. Deep Search is essentially an extended version of Deep Research integrated into the SERPs: it can run hundreds of queries and analyze thousands of documents.

For example, the query “best electric SUV” is decomposed into sub-queries such as “Tesla Model X review”, “comparison of Hyundai Ioniq 5 and Mustang Mach-E”, and “electric cars with 3 rows of seats for a family”. Each of these sub-queries returns its own answer fragments.
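A minimal Python sketch of fan-out follows. Two simplifications are assumptions, not Google’s implementation: sub-queries come from fixed, hypothetical templates (AI Mode reportedly generates them with an LLM), and retrieval is a toy term-overlap check rather than the dense retrieval described later. It illustrates why a passage that does not match the main query can still enter the answer corpus through a sub-query.

```python
# Hypothetical fan-out templates; a real system would generate sub-queries with an LLM.
FACETS = ("{q} review", "{q} comparison", "{q} for a family")


def fan_out(query: str) -> list[str]:
    """Decompose a query into sub-queries, keeping the original query first."""
    subs = [query] + [facet.format(q=query) for facet in FACETS]
    return list(dict.fromkeys(subs))  # drop duplicates, preserve order


def retrieve(sub_query: str, passages: dict[str, str], min_overlap: int = 2) -> list[str]:
    """Toy lexical retrieval: ids of passages sharing >= min_overlap terms with the sub-query."""
    terms = set(sub_query.lower().split())
    return [pid for pid, text in passages.items()
            if len(terms & set(text.lower().split())) >= min_overlap]


def build_corpus(query: str, passages: dict[str, str]) -> dict[str, list[str]]:
    """Map each retrieved passage id to the sub-queries that pulled it in."""
    corpus: dict[str, list[str]] = {}
    for sub_query in fan_out(query):
        for pid in retrieve(sub_query, passages):
            corpus.setdefault(pid, []).append(sub_query)
    return corpus


if __name__ == "__main__":
    pages = {
        "p1": "best electric suv ranking for 2025",
        "p2": "suv comparison of ioniq 5 and mach-e",
        "p3": "electric cars with three rows of seats for a family",
    }
    print(retrieve("best electric suv", pages))  # ['p1']: the main query alone
    print(build_corpus("best electric suv", pages))
```

Here only `p1` matches the main query, while `p2` and `p3` enter the corpus through the “comparison” and “for a family” sub-queries.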

Each sub-query is processed separately, and its answer fragments can be extracted from your site even if you do not rank at the top for the main keyword. This means that search becomes generative, personalized, and logically structured. Your web page may or may not be cited. Your content may appear not because of a keyword, but because one sentence matched a step in the model’s reasoning.

Google no longer just selects pages; it analyzes, reasons, and builds the logic of the answer. In the reasoning model, each step of the answer is built as a chain of thought:

The LLM builds a reasoning chain and selects the most useful passages for each step. All of this happens in an architecture that is invisible to the user and fueled by their search history.

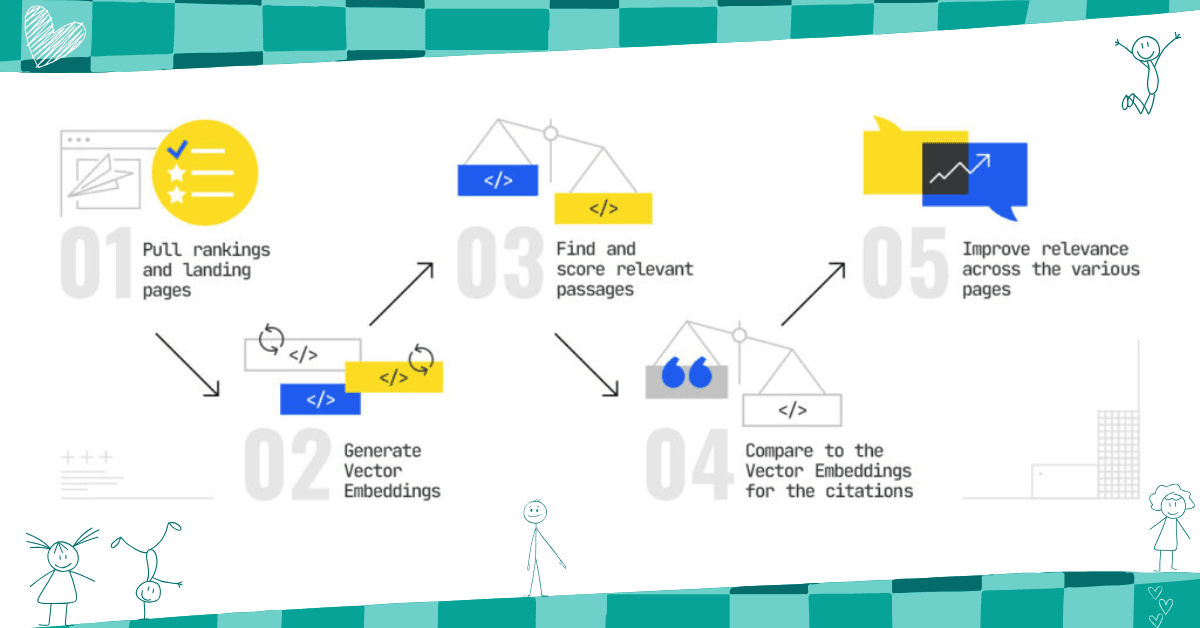

Earlier, we optimized pages. Now we need to optimize fragments within the page. AI Mode evaluates individual passages rather than the entire page:

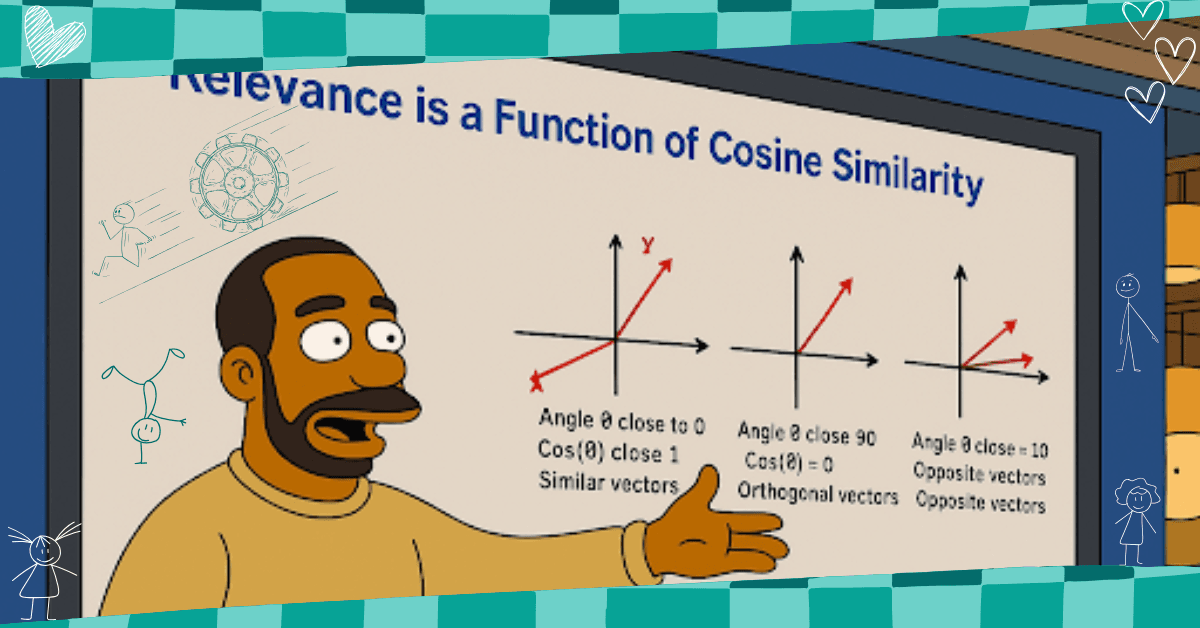
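Passage-level evaluation starts with deciding what a “passage” is. Google does not document its segmentation, so the Python sketch below is an assumption: it treats paragraphs as the first unit and cuts long paragraphs into word windows, with an arbitrary 40-word default. It is useful mainly as a way to audit your own pages passage by passage.

```python
import re


def split_passages(page: str, max_words: int = 40) -> list[str]:
    """Split page text into passage-sized chunks.

    Paragraphs (separated by blank lines) are the first unit; any paragraph
    longer than `max_words` is cut into consecutive word windows. The 40-word
    default is an arbitrary assumption, not a documented Google limit.
    """
    passages: list[str] = []
    for paragraph in re.split(r"\n\s*\n", page.strip()):
        words = paragraph.split()
        for start in range(0, len(words), max_words):
            passages.append(" ".join(words[start:start + max_words]))
    return passages


if __name__ == "__main__":
    text = ("Range depends on battery size.\n\n"
            "A three-row SUV fits a family of seven comfortably.")
    for i, passage in enumerate(split_passages(text, max_words=6)):
        print(i, passage)
```

A paragraph that only makes sense together with its neighbors will be split away from that context, which is one practical reason to write self-contained paragraphs.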

Your content must be ready to support AI reasoning. If it is vague, contradictory, or does not answer fan-out queries, it will simply not be included in the answer. AI Mode relies on dense retrieval and passage-level semantics: each query, sub-query, document, and even a single passage of text is converted into a vector representation.
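Dense retrieval boils down to comparing vectors. The Python sketch below ranks passages by cosine similarity to a query vector. The three-dimensional vectors are hand-made for illustration; a real system would produce high-dimensional vectors with a learned encoder, and Google’s exact similarity function and index are not public.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_passages(query_vec: list[float],
                 passage_vecs: dict[str, list[float]],
                 k: int = 2) -> list[str]:
    """Return the ids of the k passages whose vectors are closest to the query."""
    ranked = sorted(passage_vecs,
                    key=lambda pid: cosine(query_vec, passage_vecs[pid]),
                    reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hand-made 3-dimensional vectors; a real system would use a learned encoder.
    query = [1.0, 1.0, 0.0]
    passages = {
        "range": [0.9, 0.8, 0.1],
        "history": [0.0, 0.1, 1.0],
        "seats": [0.5, 0.9, 0.0],
    }
    print(top_passages(query, passages))  # ['range', 'seats']
```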

According to the patent “Method for Text Ranking with Pairwise Ranking Prompting,” Google has developed a system in which an LLM compares two pieces of text and determines which one is more relevant to the user’s query. This process is repeated for many pairs of snippets, and the results are then aggregated into a final ranking. This is a shift from an absolute relevance score to a relative, probabilistic one.
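The pairwise idea can be sketched as an all-pairs tournament. In this Python sketch the LLM judgment is replaced by a hypothetical `overlap_judge` that counts shared terms; it deliberately favors the first passage on ties to mimic position bias. Judging every pair in both orders and splitting the point when the verdicts disagree is one common way to handle that bias; whether Google aggregates exactly this way is an assumption.

```python
from itertools import combinations
from typing import Callable

# A judge answers: is `first` more relevant to `query` than `second`?
Judge = Callable[[str, str, str], bool]


def overlap_judge(query: str, first: str, second: str) -> bool:
    """Hypothetical stand-in for an LLM prompt like 'Which passage answers the query better?'.

    Counts shared terms; on a tie it favors whichever passage is shown first,
    mimicking the position bias that real LLM judges can show.
    """
    terms = set(query.lower().split())

    def score(text: str) -> int:
        return len(terms & set(text.lower().split()))

    return score(first) >= score(second)


def pairwise_rank(query: str, passages: list[str], prefer: Judge = overlap_judge) -> list[str]:
    """All-pairs ranking: judge every pair in both orders and sort by total wins."""
    wins = {p: 0.0 for p in passages}
    for a, b in combinations(passages, 2):
        a_first = prefer(query, a, b)
        b_first = prefer(query, b, a)
        if a_first and not b_first:
            wins[a] += 1.0
        elif b_first and not a_first:
            wins[b] += 1.0
        else:  # inconsistent verdicts (e.g. position bias): split the point
            wins[a] += 0.5
            wins[b] += 0.5
    return sorted(passages, key=lambda p: wins[p], reverse=True)


if __name__ == "__main__":
    docs = [
        "the ioniq 5 range is about 480 km",
        "electric suv range depends on battery size",
        "our company history",
    ]
    for doc in pairwise_rank("electric suv range", docs):
        print(doc)
```

Because every passage is scored only by how it fares against the others, the same text can rank differently depending on which competitors are in the candidate set.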

In practice, it looks like this:

It is not enough to rank. You need to create snippets that can win a direct LLM comparison, keeping in mind that Gemini’s reasoning also draws a series of logical conclusions from the user’s interaction history (memory).

SEO has spent 25 years preparing content to be indexed and ranked for a specific query. Now we need to create content relevant enough to be picked up by reasoning systems across a whole range of queries.

AI Mode introduces new mechanisms that we do not have access to and are unlikely to ever get, which makes search probabilistic. So even if we build technically sound websites, write content, and earn links, this will be only one of many inputs, and its effect may not be visible in the final result at all.

To create AI Mode-ready content, you need to:

Traditional positional SEO doesn’t work anymore. Your site can rank first and still not be selected as a snippet, and vice versa: it may not be in the top and still be selected. The current SEO model no longer fits a reality driven by reasoning, personal context, and Deep Research. Google is not trying to send you traffic; it wants people to get answers to their informational queries, and it sees traffic as a “necessary evil”.

New metrics for success in generative search:

Personalization through embedding profiles means that results depend on the user’s context: two users asking the same question may get different answers. AI Mode is no longer just about understanding intent; it is about an environment that remembers the user.

AI Mode personalizes responses with User Embeddings. This allows Google to tailor AI Mode responses to each user without retraining the underlying LLM. User embedding is used during query interpretation, fan-out query generation, snippet extraction, and answer synthesis.
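The effect of a user embedding on which passage wins can be illustrated with a simple linear blend of the query vector and the user vector. This is an assumption chosen for clarity: the patent describes feeding user embeddings into the model itself, not interpolating vectors, and the `alpha` weight, axes, and vectors below are hypothetical. The sketch shows how two users with the same query can end up with different top passages.

```python
def blend(query_vec: list[float], user_vec: list[float], alpha: float = 0.3) -> list[float]:
    """Shift the query vector toward the user's profile (alpha is a hypothetical weight)."""
    return [(1 - alpha) * q + alpha * u for q, u in zip(query_vec, user_vec)]


def dot(a: list[float], b: list[float]) -> float:
    """Dot-product relevance score between two vectors."""
    return sum(x * y for x, y in zip(a, b))


def best_passage(vec: list[float], passages: dict[str, list[float]]) -> str:
    """Id of the passage with the highest dot-product score for the given vector."""
    return max(passages, key=lambda pid: dot(vec, passages[pid]))


if __name__ == "__main__":
    # Hypothetical axes: [family, performance]
    query = [0.5, 0.5]  # "best electric SUV", intent-neutral on its own
    passages = {"seven_seats": [1.0, 0.0], "zero_to_sixty": [0.0, 1.0]}
    parent = [1.0, 0.0]      # profile built from family-oriented searches
    enthusiast = [0.0, 1.0]  # profile built from performance searches
    print(best_passage(blend(query, parent), passages))      # seven_seats
    print(best_passage(blend(query, enthusiast), passages))  # zero_to_sixty
```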

Use Perplexity as a training ground and test environment. It is one of the few platforms that let you see which content an LLM chooses and, to some extent, why.

Commercial niches are also under attack: even in finance, e-commerce, and medicine, Google generates answers based on reasoning. Google is already rolling out custom data visualizations directly in the SERPs, built from your data. And if content can already be mixed on the fly with Veo or Imagen, there is no doubt that full-fledged content remixing is on the way.

Search is also becoming multimodal: AI Mode is inherently multimodal and can work with video, audio and their transcripts, and images. It uses the Multitask Unified Model (MUM) architecture, which can, for example, take content in one language, translate it into another, and include it in the response.

Authority now operates at the level of fragments: not only is the credibility of the site important, but also the quality of a particular paragraph. This is especially critical for YMYL (Your Money or Your Life) content, where accuracy, expertise, and trust are paramount.

Although Google has warned against optimizing for bots for years, the new reality effectively forces us to treat bots as the main “consumers” of content. Bots now interpret information on our behalf. James Cadwallader’s thesis is that very soon users will not see your site at all: agents will process your information based on their understanding of the user and their own “thinking” about your message. This underscores how critical the authority and accuracy of each piece of content are.

We are at a crossroads. Traditional SEO still works for now, but it will not be enough for much longer. The era of semantics, logic, fragments, and vectors lies ahead. AI Mode is a challenge, but also an opportunity for those who think strategically, build deep content, and are not afraid to adapt.

There is a deep gap between what is technically required to succeed in generative IR and what the SEO industry is actually doing today. Most SEO tools are still based on sparse retrieval models instead of dense retrieval models that use vector embeddings.

We do not have the tools that:

It’s time to re-energize our community and start experimenting and learning again. The advantage will go to those of us who start by understanding how the technology works, and only then turn to strategy and tactics.

The entire AI Mode system is built on dense retrieval, where each query, sub-query, document, and individual piece of text is converted into a vector representation. Google calculates the similarity between these vectors to determine what will be used in the generative answer.

This means that it is no longer just about “getting into the search results,” but about how well your document or even a single paragraph semantically matches the hidden set of queries.
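One way to reason about matching a “hidden set of queries” is a coverage score: for each sub-query, take the best-matching passage on the page and check whether it clears a similarity threshold. The Python sketch below is a hypothetical audit metric, not something Google publishes; the 0.8 threshold and the toy vectors are assumptions, and in practice the vectors would come from an embedding model of your choice.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def coverage(subquery_vecs: list[list[float]],
             passage_vecs: list[list[float]],
             threshold: float = 0.8) -> float:
    """Share of sub-queries for which at least one passage on the page scores >= threshold."""
    if not subquery_vecs or not passage_vecs:
        return 0.0
    covered = sum(
        1 for sq in subquery_vecs
        if max(cosine(sq, p) for p in passage_vecs) >= threshold
    )
    return covered / len(subquery_vecs)


if __name__ == "__main__":
    # Three hypothetical sub-query directions; the page only addresses the first two.
    subqueries = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    page_passages = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.1]]
    print(coverage(subqueries, page_passages))
```

A low score points to sub-queries that no single paragraph on the page answers well, even if the page as a whole is on topic.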

The search has changed. Now it’s our turn.

Yes, Google still indexes web pages. AI Mode does not replace indexing; on the contrary, it actively uses it. Google extracts fragments of answers from the index, and the so-called “custom corpus” is formed from indexed documents that are semantically relevant to hidden subqueries.

However, it is worth remembering that indexing is only the first step. For your content to be included in the AI Mode response, it needs to pass several more “filters”:

Featured Snippets can be considered the forerunner of AI Overviews. Their fate in the new era is ambiguous:

According to current observations, Featured Snippets still exist, but:

Authority remains important, but its focus is shifting. Now it is mostly relevant at the level of the source of a particular fragment, not just the domain as a whole.

The LLM selects fragments that:

Google’s patents mention “quality scoring” for snippets: a separate score for each passage that can be higher or lower than the average score for the page or the site as a whole. This means that even if your domain has high authority, the quality and accuracy of each individual piece of your content are critical.

AI Mode is already active in commercial niches, and its influence will only grow. Google is actively testing and rolling out AI Overviews in the following areas:

However, there are important nuances, especially for high-risk niches (YMYL: Your Money or Your Life):

Traditional positional SEO no longer works the way it used to. Your site can rank first and still not appear in AI Mode, or, conversely, not be in the top and still be selected as a fragment. That is why new metrics, more relevant to generative search, are being added to the usual ones:

SEO reporting is also transforming, as the focus shifts from abstract positions to actual relevance and citations. Now it is necessary to track and show clients not only keyword position dynamics (still a baseline, but less critical) but also:

This requires a revision of the usual dashboards and the introduction of new analytics tools capable of working with vector data and analyzing generative results.
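As a starting point for citation-focused reporting, the Python sketch below computes a citation share: the fraction of sampled AI answers that cite a given domain. The log format (`query`, `cited_domains`) is hypothetical; it assumes you collect the answers yourself, for example by periodically sampling AI Mode for your tracked queries, since no official API for this is assumed here.

```python
from collections import Counter


def citation_share(answers: list[dict], domain: str) -> float:
    """Fraction of logged AI answers that cite `domain` at least once."""
    if not answers:
        return 0.0
    hits = sum(1 for a in answers if domain in a["cited_domains"])
    return hits / len(answers)


def citation_counts(answers: list[dict]) -> Counter:
    """How many answers cite each domain (a domain counts once per answer)."""
    return Counter(d for a in answers for d in set(a["cited_domains"]))


if __name__ == "__main__":
    # Hypothetical log of sampled AI answers for tracked queries.
    log = [
        {"query": "best electric suv", "cited_domains": ["example.com", "other.org"]},
        {"query": "ioniq 5 vs mach-e", "cited_domains": ["other.org"]},
        {"query": "3 row electric suv", "cited_domains": ["example.com"]},
        {"query": "ev charging cost", "cited_domains": []},
    ]
    print(citation_share(log, "example.com"))  # 0.5
    print(citation_counts(log).most_common())
```

Tracked over time and per query cluster, a share like this complements position data instead of replacing it.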

To ensure that your content has a high chance of being used in a generated AI Mode response, you need to change the way you create and optimize it. Here are the key steps:

In the era of generative search, traditional SEO tools are adapting, but there is a need for new solutions focused on AI Mode. Here are the tools you should be monitoring and using:

Tools that are already evolving/showing potential:

Additional tools and approaches that go beyond traditional SEO:

Adapting to the new reality of search will require SEO specialists not only to update their knowledge but also to master new tools and approaches to data analysis.